Meta, the parent company of Instagram, Facebook, and Threads, announced Tuesday that it will discontinue its third-party fact-checking program in favor of a Community Notes system, modeled after the approach used on X.

An analysis by the FCAS Command Center found that since X adopted such a community-based moderation system, accounts spreading conspiracy theories and hate on the platform have gained engagement and reach.

Decision Sparks Controversy

Meta’s decision has sparked debate. Supporters argue that it advances free speech and claim Meta has previously engaged in unfair censorship, with some alleging a bias against pro-Palestinian content. However, critics, particularly from marginalized communities, warn that the change shifts the burden of moderating hate speech onto those most affected by it.

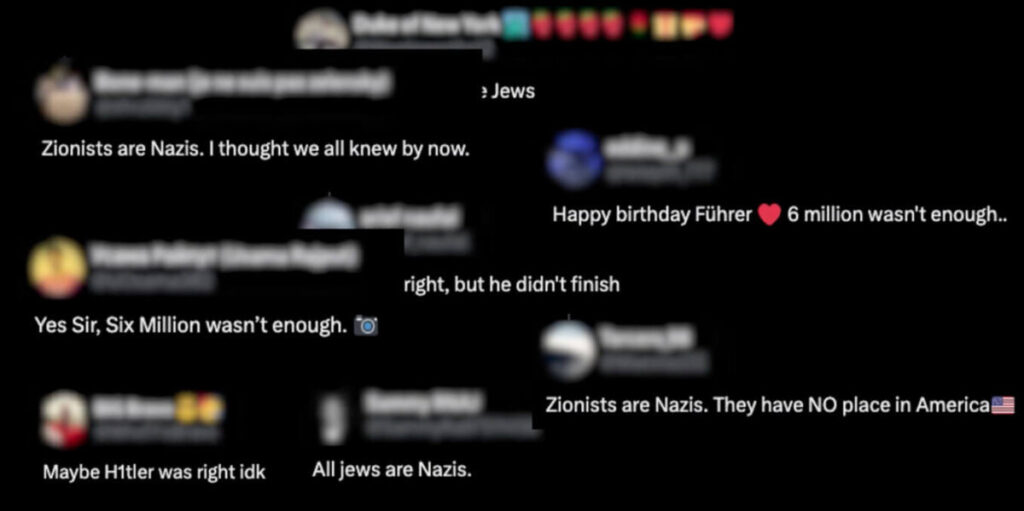

Community Notes, introduced on X in December 2022 after Elon Musk’s acquisition, relies on user-driven moderation to flag and debunk misinformation. However, since its implementation, users have reported a rise in extremist content, hate speech, and conspiracy theories.

Impact of Community Notes

FCAS data reveals that the risks of moving to a Community Notes system are significant. After the change on X in December 2022, accounts spreading conspiracy theories and antisemitic content became more active and reached significantly larger audiences.

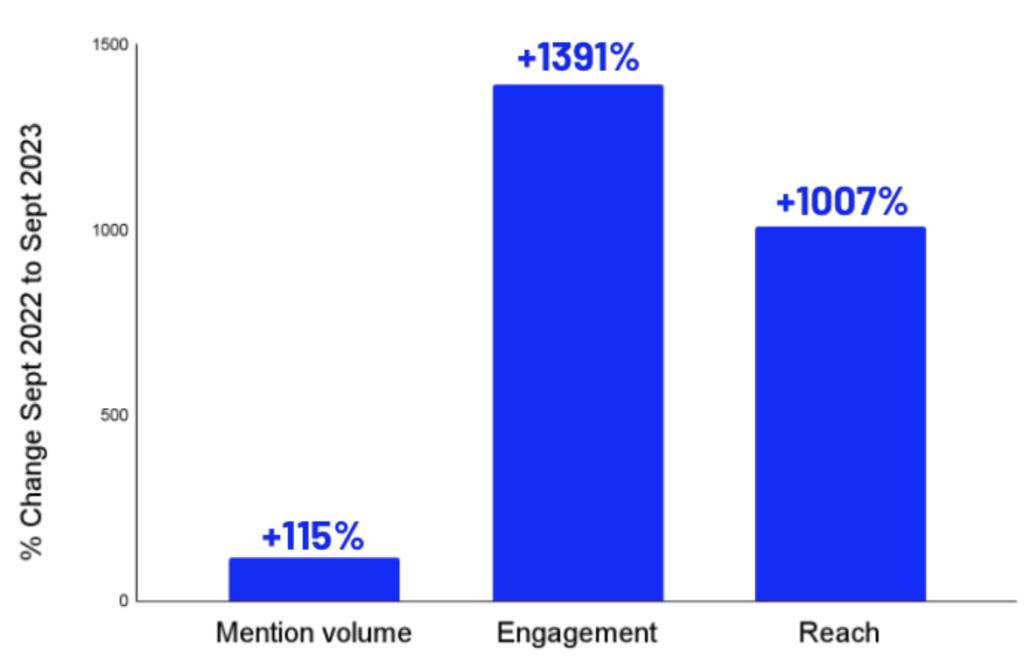

Examining eight such accounts—each flagged by Community Notes at least once—we compared post volume, engagement, and reach from September 2022 to September 2023. While post frequency increased by 115%, engagement surged nearly 1,400%, and reach grew by over 1,000%, suggesting that community-driven moderation may unintentionally amplify harmful content.
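Percent increases of this size are easy to misread, so a short sketch of the arithmetic may help: a 115% increase means roughly 2.15 times the baseline, not 1.15 times. The function names below are illustrative, and the inputs are the rounded figures quoted above ("nearly 1,400%" and "over 1,000%" are treated as 1,400 and 1,000), not the underlying FCAS data.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percent increase into a multiple of the baseline value."""
    return 1 + percent_increase / 100


def percent_increase(before: float, after: float) -> float:
    """Percent increase from a baseline value to a later value."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Rounded figures from the text above; approximations, not raw data.
reported = {"post volume": 115, "engagement": 1400, "reach": 1000}

for metric, pct in reported.items():
    print(f"{metric}: +{pct}% is about {growth_multiplier(pct):.2f}x the baseline")

# Assumption: the engagement and post figures are totals across the accounts.
# Under that assumption, engagement per post rose by the ratio of the multipliers.
per_post = growth_multiplier(reported["engagement"]) / growth_multiplier(reported["post volume"])
print(f"engagement per post: about {per_post:.1f}x the baseline")
```

If the reported figures are totals, engagement grew far faster than posting did, so the increase cannot be explained by the accounts simply posting more often.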

For instance, the X account Illuminatibot, which promotes conspiracy theories about the “Illuminati” and “New World Order,” showed minimal change in post volume but saw a 947% increase in reach year over year, peaking in August 2023.

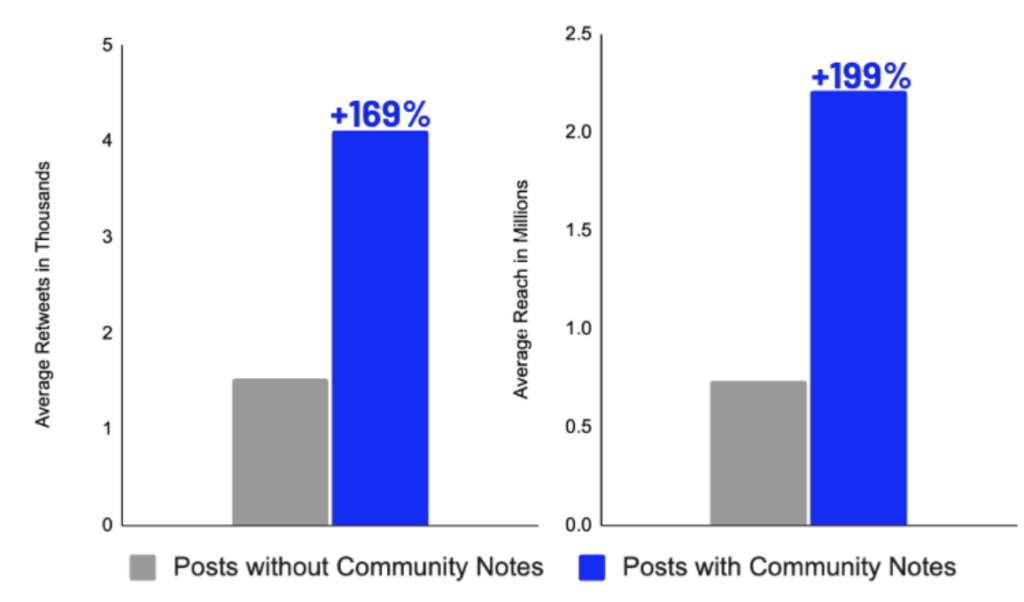

We also isolated Illuminatibot’s September 2023 tweets that received Community Notes to test whether they drew more engagement and reach than the account’s other tweets. On average, tweets tagged with Community Notes received 169% more engagement and 199% more reach.

In other words, the more controversial tweets, the ones that attract a Community Note, are being amplified rather than removed by content moderation.

While this does not establish direct causation, the data suggests that community-based moderation may contribute to greater exposure of harmful content rather than limiting its spread. Meta’s decision could lead to the same result.